Bonsoir

This mdBook contains notes from my self-study of binary exploitation and

reverse-engineering. If you have any questions about the contents within, you

can find my contact information on my website.

Reverse engineering

In this section, you'll find my notes on reverse engineering. This includes discussions on the following topics:

- Static reverse engineering

- Dynamic reverse engineering

- Instrumentation

- Fuzzing

- Symbolic execution

- Concolic execution

- Binary diffing

- Binary diffing tools

Static reverse engineering

Reverse engineers use static reverse engineering to inspect programs at rest, meaning not loaded into memory or currently executing. Reverse engineers utilize a series of tools to statically inspect a program in order to derive its purpose and functionality. Starting at the shallow end of analysis, the following tools are useful for static reverse engineering:

strings- the strings utility outputs all printable character sequences that are at least 4 characters long for a given file. This tool is useful for reverse engineers as it provides an initial peek at what functionality might exist within the target program.file- the file utility gives a best-effort determination of what type of file the reverse engineer is inspecting.filelooks for common ASCII strings, but also checks a file to see if it contains data structured according to known file formats like ELFs.PEiD- a Windows tool that's useful for identifying the compiler used to build a Windows PE and any tools used to obfuscate the PE being inspected.

Some tools that provide a little more sophistication for static reverse engineering are as follows:

nm- this utility lists the symbols in a provided object file or executable . Usingnmon object files just shows an object file's exported global varibles and symbols - an executable provides similar output, however, external functions that the program links against will also be present.ldd- list dynamic dependencies, this will list the libraries a dynamically linked executable uses / expects in order to implement its functionality.objdump- an extremely versatile utility for ELF inspection, this program can provide a reverse engineer the following information about a program:- Program section headers - a summary of the information for each section in

the program - e.g.:

.text,.rodata,.dynamic,.got,.got.plt, etc. - Private headers - program memory layout information and other information

required by the loader, e.g. a list of required libraries - produces similar

output to

ldd. - Debugging information

- Symbol information

- Disassembly listing -

objdumpperforms a linear sweep disassembly of the sections of the file marked as code.objdumpoutputs a text file of this listing, commonly known as adead listing.

- Program section headers - a summary of the information for each section in

the program - e.g.:

c++filt- a tool that understands and deciphers the symbol name mangling scheme of different compilers for C++ applications. Compilers mangle symbol names with some standardized formatting in order to avoid symbol collisions of symbols within different namespaces.c++filtnot only de-mangles the symbol output of tools likenmong++compiled programs, but is also able to identify and fix paramters passed to previously mangled symbols.

Finally, at the deep end of static reverse engineering tools:

ndisasm- a stream disassembler, this utility doesn't require the data inspected to be a valid program. It just takes a stream of bytes and attempts to accurately disassemble the bytes to provide readable assembly code. Of course you'll have to providendisasmwith the suspected target architecture and pointer size (32bit or 64bit).IDAPro- created by HexRays, IDAPro is a static reverse engineering tool that conducts disassembly and decompliation of a target program and uses a proprietary technology called FLIRT (Fast Library Identification and Recognition Technology) for standard library function recognition.Ghidra- a crowd favorite, Ghidra is a static reverse engineering tool that disassembles and decompiles a target program using a recursive descent algorithm. Without going too in depth into the differences between linear sweep and recursive descent disassembly, Ghidra follows branches , functions calls, returns, etc. when disassembling a target binary. This is in contrast to objump which disassembles blocks of bytes which can be assumed to be valid instructions. Recursive descent disassembly provides the reverse engineer with more context and usually more accurate results for disassembled programs.Binary Ninja- created by Vector35 and similar to both IDAPRo and Ghidra, Binary Ninja is pretty good at providing a high level intermediate language representation of a target program. Like IDAPro and Ghidra, Binary Ninja allows reverse engineers to program scripts against its API - this is usually done to fix known issues with disassembly or provide general quality of life improvements.

Pros of Static Reverse Engineering

Reverse engineers can utilize static reverse engineering to gather information about a target binary without having to load and execute that binary in memory. This is most useful when a reverse engineer needs to gather information about a malicious program but wants to avoid having that malicious program execute its functionality on the reverse engineer's system. Static reverse engineering is also useful when the reverse engineer does not have the requisite system(s) to load and execute a target for dynamic analysis. This scenario is common when inspecting programs extracted from embedded devices or real-time operating systems. These programs are usually compiled for a different architecture than the reverse engineer's machine and expect special / custom devices to be present in order to implement their functionalities. Static reverse engineering allows analysts the time and ability to deeply inspect a target program and, with tools like Ghidra and IDAPro, an analyst can generate and analyze control flow graphs (CFG)s, data dependencies, decompiled code, etc. Finally, reverse engineers can easily collaborate when conducting static reverse engineering. The Ghidra Server is a prime example of this, providing change control for a repository of programs as analysts comment, label, and update listings.

Cons of Static Reverse Engineering

Try as we might, some things can't be answered by static reverse engineering. Often times, if the author of a program intends upon protecting their intellectual property - or if the author is a malware developer - programs can come packed / compressed. These usually contain a decoder stub that will unpack the real program to be executed into the process memory. A number of unpacking programs exist that help reverse engineers get back to statically analyzing their targets, however, it's possible a paranoid malware author could invent their own packer / unpacker with custom encryption, etc.

This increase in sophistication requires reverse engineers to conduct deeper inspection on custom unpacking algorithms written by malware authors. Luckily, tools like Ghidra, Binary Ninja, and IDAPro provide scripting interfaces that allow reverse engineers to write and execute custom scripts. This enables reverse engineers with the ability to operate on the disassembly programatically, making the unpacking of custom packed malware more feasible.

Dynamic reverse engineering

Dynamic reverse engineering is utilized by analysts to derive conclusions that can't be discovered with static reverse engineering. In order to conduct dynamic reverse engineering, analysts load and execute the program of inspection (POI) into memory in a controlled environment. Analysts usually attempt to recreate the exact environment the POI normally executes in order to remove any variables that might cause differences for exploit development. The following are some technologies and techniques used to accomplish dynamic reverse engineering:

- Containerization - analysts can place POIs into containers using technologies like Docker. This allows analysts to control the POI's use of system resources and software packages. Containerization is useful in identifying a POI's dependencies and enables the recreation of a POI's exploitable environment. Containers are a excellent choice for quickly creating lightweight, virtualized environments for dynamic reverse engineering and exploit development.

- Virtualization - virtualization technologies like VMware, VirtualBox, and QEMU allow analysts to create an entire Guest Operating System running the POI's intended Operating System, managed by a hypervisor on the Host Operating System . These virtualization technologies are useful for dynamic reverse engineering because the analyst can control the Guest Operating System's resources, e.g. network cards, graphics cards, host dynamic memory, disk memory, etc. Full virtualization also makes virtualization entirely transparent to the Guest Operating System - all system calls are translated by the hypervisor to the host machine without the Guest OS's awareness. This is useful for the inspection of POI's with more sophisticated virtualization detection mechanisms.

- QEMU user-mode emulation - this project recevies its own bullet due to its

usefulness for dynamic reverse engineering. QEMU's user-mode emulation through

programs found in the

qemu-user-staticpackage allows analysts to load and execute programs compiled for different architectures. Not only that, it provides an easy interface for changing the POIs environment variables, library loading behavior, system call tracing, and remote debugging. Knowledge and use of QEMU's user-mode emulation is a must for analysts attempting to reverse engineer embedded software. - Function call hooking - during dynamic reverse engineering, sometimes programs execute function calls to external libraries expecting specific responses in order to continue execution. A majority of the time, analysts are unable to recreate the correct environment in order to correctly service these external library function calls, thus the program aborts or exits before executing any interesting behavior. Analysts combat this by writing hooks in C and compiling shared objects for dynamically-linked POIs, intercepting a POI's external library function calls and returning correct values so that the program can continue execution without failure.

- Debugging - with utilities like

gdborwindbg, analysts can examine the process stack, heap, and registers while a POI executes in process memory. This deep inspection allows analysts to truly understand how a POI behaves, what return values it expects from internal and external subroutines, and also opens a window to extracting packed / encrypted programs from process memory after decoding. - Symbolic execution - symbolic execution, sometimes known as symbolic analysis, is a technique used to derive the inputs to a program that satisfies a constraint for a specific path of execution. A symbolic execution engine will analyze a specific slice of the program and determine what variables are used to reach a target address. From here, a symbolic execution engine can conduct "concolic execution", a term used to describe a mix of symbolic and concrete execution wherein the engine determines the satisfiability contraints for a path of execution and tests different inputs to inspect their side effects. Symbolic analysis has various security applications and use cases for reverse engineers, enabling them with the ability to find vulnerabilities, generate exploits, and conduct full code coverage for malware specimens.

Pros of Dynamic Reverse Engineering

Reverse engineers can utilize dynamic reverse engineering to answer questions that can't be satisfied by static reverse engineering. In addition, dynamic reverse engineering allows analysts to understand the behavior of programs that have been packed or encrypted, allowing them to unpack or decrypt themselves prior to being loaded into memory by the decompression / decoder stub of the program. Using this technique, analysts can dump the program from process memory onto the disk for further static analysis.

During dynamic reverse engineering, analysts can gradually provide POIs with necessary resources to inspect behavioral changes. This technique is most commonly used with programs that receive command and control (C2) communications from a source on the Internet - allowing POIs to have access to a network card within a controlled environment.

Dynamic reverse engineering allows analysts to understand the true intent of a POI, if it wasn't already readily apparent during static analysis. Because most analysis occurs within a virtual environment, an analyst can execute the program without risk to lab infrastructure or information - this is useful for the inspection of malware like ransomware, wipers, worms, etc.

Cons of Dynamic Reverse Engineering

There will always be inherent risk in executing code that you didn't write yourself. It's entirely possible that a POI utilizes some undisclosed jailbreak / sandbox escape that could cause harm to the analyst's workstation. In addition, most malware today comes with functionality to detect virtualization or debugging techniques. Some malware even goes so far as to report an analyst executing the malware within a sandbox environment - alerting the malware author to update or change the program distribution to hamper reverse engineering efforts.

Despite all best efforts, sometimes it will be impossible to recreate the POIs operating environment. Using dynamic reverse engineering, analysts have to make assumptions that might not always be correct - this can cause analysts to focus on unimportant features of the POI or paths of execution that may never take place in the real environment.

Virtualization for dynamic reverse engineering also has its limitations. A prime example of this is the Spectre security vulnerability. [1] This vulnerability affects modern processors that perform branch prediction - attackers can leverage the side effects of speculative execution and branch misprediction to reveal private data. It would be infeasible for a reverse engineer to inspect a malware specimen exploiting this vulnerability from a virtualized environment. Likely, the reverse engineer would have to conduct debugging and analysis on a physical processor.

References

Dumb fuzzing

Foreword

Before we begin our discussion on fuzzing techniques, note that the terms and definitions used by different fuzzing publications, tools, etc. are not congruent. Currently, there doesn't seem to be a standard language when discussing ideas and concepts related to fuzzing - every blog or article is using terms and definitions differently.

Because of this, all of the language we will use for this and future discussions on fuzzing will be derived from [1]. It's an excellent resource that pulls together the most popular fuzzing tools and publications and attempts to standardize our understanding of the fuzzing process as well as all of the terms and techniques associated with fuzzing.

Model-less fuzzing (dumb fuzzing)

Fuzzers are used to generate input for programs under test (PUTs), attempting to find inputs that crash the program - a crash being some sort of unrecoverable state like a segmentation fault. Being programmers ourselves, we understand that most programs have vulnerabilities in only very specifc sections of code, requiring specific inputs to traverse an execution path that reaches the vulnerable code.

Model-less fuzzers, or dumb fuzzers, generate input data for PUTs without any knowledge of the structure required for the data expected by the PUT. A good example provided by [1] is the fuzzing of an MP3 player application. MP3 files have a particular structure that needs to be met and, if the MP3 player application is provided an invalid file, it will exit without executing further code. In this example, unless the model-less fuzzer is provided with some initial seed data, the model-less fuzzer will be unable to traverse further execution paths until it correctly guesses the data structure expected by the MP3 player application - this is an infeasible approach to exploitation.

Model-less fuzzers are mutation-based, all of the input they generate for PUTs is based off of a set of seeds provided by the researcher. Seeds are input data that can be correctly ingested by the PUT and allows the fuzzer to reach sections of code that are usually executed after the input data is validated. Seeds are usually derived from real-world application use. Once the model-less fuzzer is provided with enough seeds, the fuzzer will generate mutations of the seeds as test cases for the PUT.

Bit-Flipping

Bit-flipping is a common technique used by model-less fuzzers to mutate

seeds and generate test cases for PUTs. The fuzzer flips a number of bits in

the seed, whether it be random or configurable is determined by the

implementation of the fuzzer. Bit-flipping, model-less fuzzers that allow

user-configuration usually employ a configuration parameter called the

mutation ratio, a ratio defined as K random bits in an N-bit seed:

K/N.

Arithmetic Mutation

Arithmetic mutation is a mutation operation where a selected byte sequence

is treated as an integer and simple arithmetic is performed on it by the

fuzzer. For instance, AFL selects a 4-byte value from a seed and then replaces

the integer within that seed with a value +/- some random, small number r.

Block-based mutation

Block-based mutation involves:

- Inserting a randomly generated block of bytes into a random position in a seed.

- Deleting a randomly selected block of bytes from a seed.

- Replacing a randomly selected block of bytes with a random value in a seed.

- Generating random permutations for the order of blocks in a seed.

- Resizing seeds by appending random blocks.

- Randomly switching blocks of different seeds.

Dictionary-based mutation

Dictionary-based mutation involves a fuzzer using a set of pre-defined

values with significant semantic weight, for example 0,-1, or format

strings, for mutation.

Feedback and evaluation

So how does a fuzzer detect the discovery of an interesting input? Model-based and model-less fuzzers both leverage the use of bug oracles for input evaluation. A bug oracle is a part of the fuzzer that determines whether a given execution of the PUT violates a specific security policy. Usually, bug oracles are designed to detect segmentation faults but, with the implementation of sanitizers, fuzzers can also detect unsafe memory accesses, violations of control flow integrity, and other undefined behaviors.

Problems with model-less fuzzing

A practical challenge that model-less, mutation-based fuzzing faces is a program's use of checksum validation, a primary example being the use of a cyclic redundancy check (CRC). A CRC is an error-detecting code that ensures that the integrity of the data contained in the input file is preserved during transmission. If a PUT computes the checksum of an input before parsing it, it's highly likely that most of the test input provided by the fuzzer will be rejected.

In this case, model-base fuzzing of the PUT is more likely to succeed, as showcased in Experiment 2 of [2]. During the experiment, the model-based fuzzer had full knowledge of how the input data was to be structured, including how the checksum was generated. This allowed the fuzzer to achieve greater code coverage than any of the model-less, mutation-based fuzzer implementations.

References

- https://arxiv.org/pdf/1812.00140.pdf

- https://www.ise.io/wp-content/uploads/2019/11/cmiller_defcon2007.pdf

Instrumentation

Before we begin a discussion on instrumentation, let's define the different types of fuzzing because they can be categorized based upon the amount of instrumentation they leverage.

Black-box fuzzers

Black-box fuzzers use fuzzing techniques that do not see the internals of programs under test (PUTs). Black-box fuzzers make decisions upon the input/output behavior of the PUT. These types of fuzzers are colloquially called IO-driven or data-driven in the software testing community. [1]

White-box fuzzers

White-box fuzzers generate test cases by analyzing the internals of a PUT, exploring the state space of the PUT systematically. White-box fuzzing is also called dynamic symbolic execution or concolic testing (concrete + symbolic) . What this basically means is that the fuzzer leverages concrete and symbolic execution simultaneously, using concrete program states to simplify symbolic constraints. [1]

Grey-box fuzzers

Grey-box fuzzers are able to obtain some information about a PUT and its executions. In contrast with white-box fuzzers, grey-box fuzzers only perform lightweight static analysis on the PUT and gather dynamic information during program execution such as code coverage - they utilize approximate information in order to speed up the rate of testing. [1]

Instrumentation

Grey and white-box fuzzers instrument the PUT to gather feedback for each execution of the program. Black-box fuzzers don't leverage any instrumentation so they won't be discussed in this section. What is instrumentation? [1] defines two categories:

- Static program instrumentation - instrumentation of a PUT before it is executed. This is usually done at compile time on either source code or an intermediate representation. An good example is how AFL instruments every branch instruction of a PUT in order to compute branch coverage. This feedback allows AFL to choose test cases that are more likely to traverse more or newer branches of a PUT.

- Dynamic program instrumentation - instrumentation of a PUT while the program is being executed. Dynamic instrumentation provides the advantage of easily instrumenting dynamically linked libraries because the instrumentation is performed at runtime. Leveraging dynamic program instrumentation provides a fuzzer with a more complete picture of code coverage as its able to obtain information from external libraries.

So how does this aid fuzzers?

As stated earlier, instrumentation provides execution feedback to grey and white-box fuzzers, providing the fuzzers with information useful for making decisions on which seeds to prioritize for the generation of input data. Fuzzers leverage feedback from instrumented PUTs to generate statistics and track which portions of the code have been traversed and which execution paths are most likely to produce a crash.

Instrumentation also aids fuzzers through in-memory fuzzing. In-memory fuzzing is when a fuzzer only tests a portion of the PUT without respawning the process for each fuzz iteration. This is often useful for large applications that use a GUI and often need time to execute a significant amount of initialization code. The fuzzer can take a memory snapshot of the PUT right after it has been initialized and then utilize this memory snapshot to begin the fuzzing campaign. Instrumentation used in this manner increases the speed in which a fuzzer can execute test cases to find crashing input.

References

Coverage-based fuzzing

We've somewhat discussed code-coverage based fuzzing in the previous sections when talking about grey-box testing or the AFL fuzzer, but here we'll actually provide a concrete definition, some examples, and references.

Code-coverage based fuzzing is a technique that programmers and fuzzers use in order to uncover as many bugs as possible within a program. In previous sections we talked about mutation-based fuzzing and generating random input with dumb fuzzers in an attempt to generate a crash, but those techniques aren't super helpful if the program never uses all of its code to evaluate our input. Code-coverage based fuzzing solves this argument, the more code we execute within a program the more likely we are to find a bug.

Examples and resources

The Fuzzing Book

First I'll start with the The Fuzzing Book's article on code-coverage based fuzzing. This article explains code-coverage based fuzzing in depth and provides examples for Python and C applications. The article conducts code-coverage based fuzzing for a CGI decoding application, an application that takes URL encoded strings and reverts them to their original text.

In this article, the author also takes the time to make the distinction between black-box fuzzing and white-box fuzzing, and how white-box fuzzing enables code-coverage based fuzzing. Without instrumentation, we aren't able to conduct code-coverage based fuzzing because the fuzzer doesn't have the ability to capture which lines of code have been executed by the program under test (PUT). With instrumentation, however, we can conduct code-coverage based fuzzing for both program statements and branch instructions.

In this particular example, the author uses statement-based coverage to acquire code-coverage fuzzing statistics. Because the decoder application is so small, the author only needed to run ~40-60 fuzzing iterations to cover all lines of code in the application.

Fuzzing with Code Coverage by Example

This presentation given by Charlie Miller at Toorcon 2007 provides some good historical context and expands upon the advancements made towards mutation-based, generation-based, and code-coverage based fuzzing. It's a thorough explanation with some great examples and pratical exercises for code-coverage based fuzzing. The most interesting part is its mention of evolutionary algorithms for code-coverage based fuzzing, and how mutation-based , model-less fuzzers can utilize the feedback provided by code-coverage based fuzzing to select more "fit" inputs.

Fuzzing by Suman Jana

This presentation by Suman Jana from Columbia University in the City of New York, provides a more recent synopsis of code-coverage based fuzzing. This presentation covers one of the more popular fuzzers in the research community that uses code-coverage based fuzzing, American Fuzzy Lop (AFL). AFL is a model-less, mutation-based, grey-box fuzzer that leverages instrumentation at compile time to conduct branch-based code-coverage fuzzing. Throughout a fuzzing campaign, AFL will use its instrumentation to evaluate what branches a particular seed was able to cover. AFL attempts to generate a pool of seeds that cover as much of the program as possible, and also trims seeds from the pool to produce the minimum required input to cover sections of code.

Evolutionary Algorithms

An Evolutionary Algorithm (EA) uses biological evolution mechanisms such as mutation, recombination, and selection to maintain a seed pool of "fit" input candidates for a fuzzing campaign, and new candidates can be discovered and added to this seed pool as more data is collected. Most grey-box fuzzers leverage EAs in tandem with node or branch coverage to generate seeds, preserving seeds that discover new nodes or branches and trimming seeds until the minimum amount of input required to cover a branch is discovered. The implementations of EAs across different grey-box fuzzers varies, but they all end up achieving similar goals using this feedback mechanism. [4]

References

- https://www.fuzzingbook.org/html/Coverage.html#

- https://www.ise.io/wp-content/uploads/2019/11/cmiller_toorcon2007.pdf

- https://www.cs.columbia.edu/~suman/secure_sw_devel/fuzzing.pdf

- https://arxiv.org/pdf/1812.00140.pdf

Symbolic execution

Symbolic execution is a program analysis technique that can be used to determine what concrete values are required to cause different parts of a program to execute. It's goals are similar to code-coverage based fuzzing, however, it's different because it's not generating and mutating a bunch of concrete input and throwing it at the program until it reaches a new execution path or branch.

Symbolic execution analyzes a program and assigns symbolic values to all expressions that cause conditional branches within a program. A symbolic execution engine maintains and generates path conditions using these symbols and expressions, providing the researcher with a symbolic representation of how to reach different execution paths within the program.

Symbolic execution engines also utilize model checkers, taking the generated path conditions for each execution path and leveraging a satisifiability modulo theories (SMT) solver, or constraint solver, to calculate the concrete values that satisfy the formulas represented by each path condition. [1]

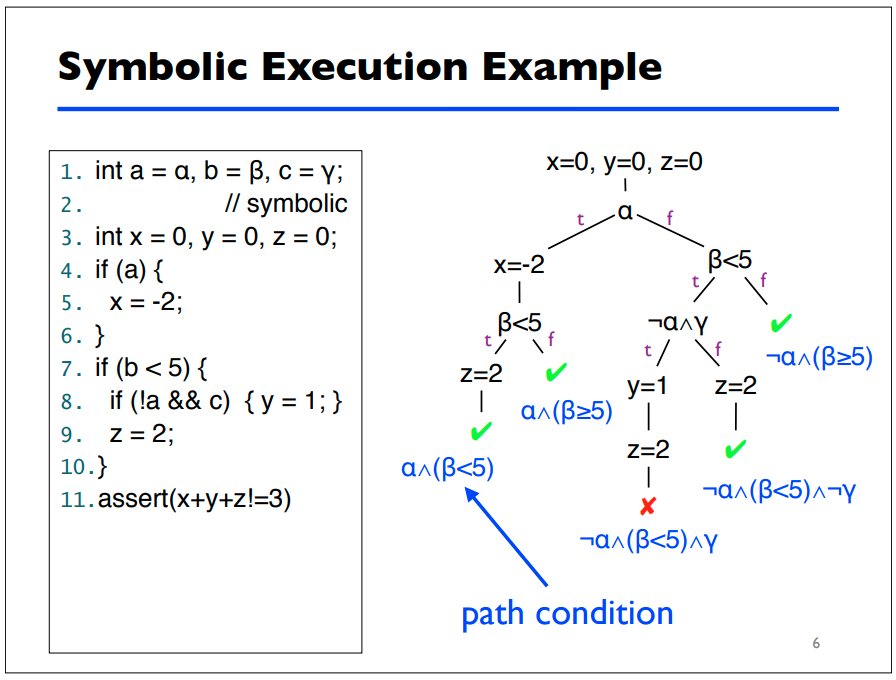

Example

Here's an example from a presentation on symbolic execution from the University of Maryland [2]:

This example provides the reader with a solid breakdown of how path conditions

are generated for different paths of execution in a small snippet of code. The

symbolic execution engine will provide to the tester what conditions need to be

true for the assert call to fail. From there, the engine can also derive

concrete values for each symbol that will cause the program to fail the

assertion.

Concolic execution

Dynamic symbolic execution or concolic execution is a form of white-box fuzzing that conducts symbolic execution while the program is being executed with concrete values. Like we discussed earlier, this form of white-box fuzzing is possible through instrumentation of the PUT. The symbolic execution engine is able to recieve feedback on the concrete input it provides to the PUT allowing it to derive symbolic constraints for different paths of execution more efficiently. The algorithm for concolic execution is actually explained pretty well in the concolic testing Wikipedia article. [3].

Applications

Symbolic execution is useful for software verification and testing because, unlike static analysis tools, symbolic execution will never produce false positives for software errors. Symbolic execution is useful for software engineering because its final product is a concrete input that will cause the program under test (PUT) to fail a specification. Various different symbolic execution engines and tools have been used across the industry by software engineers and hackers alike to find security vulnerabilities.

Limitations

There are some properties of programs that can hinder program analysis using symbolic execution. A quick listing and short description of these limitations referencing [1] can be found below:

- Memory - symbolic execution engines have a hard time handling pointers, arrays, and other complex objects like the contents of data structures stored on the heap.

- Environment - symbolic execution engines don't account for things like calls to other libraries, file system operations, etc. A symbolic execution engine could be testing path conditions that contain file operations for some static filename. Unlike the tests being conducted by the engine, the state of the file is permanent and thus, if the contents of the file are used for conditional branches or expressions, this could cause non-deterministic outcomes for different tests conducted by the engine.

- Path explosion - symbolic execution engines don't scale well when testing large programs - the number of feasible paths in a program grows exponentially with program size. The number of feasible paths tracked by an engine can also become infinite given some loop exists within the program that contains an unbounded number of iterations.

References

- https://arxiv.org/pdf/1610.00502.pdf

- https://www.cs.umd.edu/~mwh/se-tutorial/symbolic-exec.pdf

- https://en.wikipedia.org/wiki/Concolic_testing

Binary diffing

Binary diffing or patch diffing is a technique that compares two binaries derived from the same code with the goal of discovering their differences and revealing any technical details that weren't covered in the changelogs, bulletins, or patch notes for an update. Researchers use binary diffing to compare known-vulnerable binaries to post-patch binaries in an attempt to suss out how a vulnerability was patched by the author. It's common for researchers to also find a way around a discovered patch or the patch can also expose other vulnerabilities within the binary that were previously overlooked.

Vulnerabilities discovered through binary diffing are colloquially termed 1-day bugs / vulnerabilities as they are usually discovered the day after a patch is released. 1-day bugs can be used to exploit and compromise users or organizations that are slow to adopt the newest security patches.

Binary diffing is also useful for discovering security patches within entire code cores for products. In the case of this Google Project Zero article, the researcher leverages binary diffing to discover kernel memory disclosure bugs in the Windows operating system core - bugs that can be useful to defeat kernel ASLR in other exploits.

Limitations

Most of the examples online that aim to teach binary diffing use simple programs with small changes, however, in real-world scenarios binary differs do face some obstacles. Here are a few examples as listed by an Incibe-Cert article on binary diffing [2]:

- Unrelated differences - it's common that changes in the compiler or compiler optimizations can cause small differences to manifest between two different binaries. A security researcher must be able to identify when a difference between two binaries is unrelated to a patch.

- Bugfixes not related to a vulnerability - sometimes bugfixes are present in a new version of the binary that are completely unrelated to the vulnerability of interest.

- Obfuscation and anti-diffing techniques - some authors and organizations purposefully obfuscate and leverage anti-diffing techniques for patches to prevent researchers from finding vulnerabilities or reverse-engineering patches to previous vulnerabilities. This BlackHat 2009 presentation by Jeongwook Oh goes into great detail about binary diffing and various obfuscation and anti-diffing technologies.

References

- https://googleprojectzero.blogspot.com/2017/10/using-binary-diffing-to-discover.html

- https://www.incibe-cert.es/en/blog/importance-of-language-binary-diffing-and-other-stories-of-1day

- https://www.blackhat.com/presentations/bh-usa-09/OH/BHUSA09-Oh-DiffingBinaries-SLIDES.pdf

Binary diffing tools

Diaphora

Diaphora, Greek for "difference", is a binary diffing project and tool started by Joxean Koret, originally released in 2015. Diaphora is advertised as the most advanced binary diffing tool that works as an IDA Pro plugin. IDA Pro is a licensed disassembler and debugger for state-of-the-art binary code analysis.

Diaphora features all of the common binary diffing techniques such as:

- Diffing assembler.

- Diffing control flow graphs.

- Porting symbol names and comments.

- Adding manual matches.

- Similarity ratio calculation.

- Batch automation.

- Call graph matching calculation.

And it also comes with many more advanced features listed in its README.md.

An IDA Pro license comes at a hefty price, however, the contributors to

Diaphora have advertised that support for Ghidra and Binary Ninja are being

actively developed. Ghidra is a free software reverse

engineering suite of tools developed by the National Security Agency (NSA).

Binary Ninja is another licensed reversing platform developed by

Vector 35.

Bindiff

Bindiff is another binary diffing tool developed by Zynamics and works as a plugin for IDA Pro, as well. Here are some of its use cases as advertised on their web page:

- Compare binary files for x86, MIPS, ARM, PowerPC, and other architectures supported by IDA Pro.

- Identify identical and similar functions in different binaries.

- Port function names, anterior and posterior comment lines, standard comments and local names from one disassembly to the other.

- Detect and highlight changes between two variants of the same function.

It's not all about IDA Pro; As of March 1, 2020, Zynamics released Bindiff 6 which provides experimental support for Ghidra. Open-source research has been conducted to generate a plugin for these new features and a plugin called BinDiffHelper is currently available that aims to provide easy-to-use support for Bindiff 6 with Ghidra.

Radiff2

Radiff2 is Radare2's binary diffing utility. Radare2 is a rewrite from scratch of Radare in order to provide a set of libraries and tools to work with binary files. The Radare project started as a forensics tool, a scriptable command-line hexadecimal editor able to open disk files, but later added support for analyzing binaries, disassembling code, debugging programs, attaching to remote gdb servers, and so on.

Radiff2 is open-source and attempts to provide the same utility and functionality as the binary diffing tools listed above without having to cater to licensed tools like IDA Pro and Binary Ninja.

References

- https://github.com/joxeankoret/diaphora

- https://www.hex-rays.com/products/ida/

- https://ghidra-sre.org/

- https://binary.ninja/

- https://www.zynamics.com/bindiff.html

- https://github.com/ubfx/BinDiffHelper

- https://r2wiki.readthedocs.io/en/latest/tools/radiff2/

- https://github.com/radareorg/radare2

Common vulnerabilities

In this section, you'll find my notes on common vulnerabilities that you may find during reverse engineering and how to exploit them. This includes discussions on the following topics:

- Stack buffer overflows

- Heap buffer overflows

- Use after free

- Heap grooming

- Race conditions

Stack buffer overflow

The stack

Before we talk about overflowing data structures on the stack, let's define what the stack is.

The stack is a data structure with two principal operations, push and pop.

The stack follows a last in, first out (LIFO) convention meaning the

top-most element of the stack is the first to be removed from the data

structure when a pop operation occurs. Newer values are pushed to the top

of the stack and cannot be removed until succeeding values are poped from the

stack. [1]

The rest of our discussion is related to the process stack of ELF binaries. Each process has a contiguous segment of memory that is set aside to store information about the active subroutines of the program. The initial stack layout provides the process access to the command line arguments and environment used when executing the program. An example of the initial process stack can be found below [2]:

argc // argument count (int)

argv[0] // program name (char*)

argv[1] // program arguments (char*)

...

argv[argc-1]

NULL // end of arguments (void*)

env[0] // environment (char*)

...

env[n]

NULL // end of environment (void*)

The stack can be implemented to grow down (towards lower memory addresses) or

up (towards higher memory addresses). Most common stack implementations grow

downwards as data is pushed to the stack and this is what we will use for

this discussion. A register called the stack pointer is used to track

the last address of the stack, the most recent element pushed to the stack.

Many compilers also use a second register, the frame pointer, to reference

local variables and parameters passed to functions.

Stack frames

The stack is used to implement functions for programs. For each function call, a section of the stack is reserved for the function - a stack frame. Below is some example C code that we will use for the rest of our stack frame discussion:

int my_function(int a, int b) {

char buffer[32];

return a + b;

}

int main(int argc, char** argv) {

my_function(1, 2);

return 0;

}

Example assembly language output for the call to my_function() could be:

push 2 // push arguments in reverse

push 1

call my_function // push instruction pointer to stack and jump

// to beginning of my function

The above assembly code follows the cdecl calling convention, we'll also use

this calling convention for the rest of our discussion. The above assembly code

showcases how the arguments for the callee function, my_function(), are being

passed to the function by the caller, main, using the stack. The call

instruction pushes the instruction pointer onto the stack. This will be used

by a ret instruction to return to the caller. [3]

Entering my_function(), we'll see the function prologue setting up the

stack frame. Here's an example of what this would look like:

push rbp // save the frame pointer of the caller to the stack

mov rbp, rsp // set the new frame pointer

sub rsp, 64 // make space for char buffer[32]

Below is an example of what the stack frame would look like for my_function()

:

0xdeadbeefcafe0000 buffer[0] // rsp

...

0xdeadbeefcafe0040 buffer[31] // end of buffer

0xdeadbeefcafe0048 saved frame pointer // rbp

0xdeadbeefcafe0050 return address

0xdeadbeefcafe0058 a

0xdeadbeefcafe0060 b

Functions use the rbp and relative addressing to reference local variables

and parameters passed to the function.

Lastly, we have the function epilogue which reverses the actions of the prologue, restoring the caller's stack frame and returning to the caller. An example function prologue follows:

leave // mov rsp, rbp; pop rbp;

ret

The leave instruction moves the rsp back to where it was before we entered

the function, directly after the caller executed the call instruction. The

rbp is also restored so the caller can correctly access the contents of its

stack frame when it resumes execution. The ret instruction sets the program

counter to the return address now contained at the top of the stack.

[4]

Buffer overflows

Finally, we can talk about stack buffer overflows and how they can be used to hijack the execution of a process. Stack buffer overflow vulnerabilities are a child of the out-of-bounds write weakness and are a condition in which a buffer being overwritten is allocated on the stack. [5][6] Provided below is some example C code that contains a stack buffer overflow vulnerability:

#define MAX_SIZE 64

int main(int argc, char** argv) {

char buffer[MAX_SIZE] = {0};

strcpy(buffer, argv[1]);

return 0;

}

In this example, the size of argv[1] is not being checked prior to writing

its contents to the stack buffer, char buffer[MAX_SIZE]. If argv[1] is

greater than MAX_SIZE, the strcpy() operation will copy the contents of

argv[1] to buffer but will also overwrite the bytes directly after the

buffer variable on the stack.

Referencing the stack frame layout examples provided earlier, we can see that this out-of-bounds write on the stack can lead to the corruption of sensitive stack data, specifically the saved frame pointer and the return address.

So how can this lead to arbitrary code execution?

Stack information that is usually targeted by an attacker to gain code execution is the return address. Overwriting this, an attacker can redirect code execution to any location in memory in which the attacker has write access. Historically, the first in-depth article that demonstrates using a stack buffer overflow to gain arbitrary code execution is Smashing The Stack For Fun And Profit by Aleph One.

As smashing the stack became more popular, various mitigations were implemented

to protect sensitive information on the stack from being overwritten, e.g.

stack canaries. Another mitigation involves setting permissions for segments of

memory within a process, and setting the permissions of the stack to be

read/write only - preventing the execution of shellcode stored in the stack.

These mitigations led to the creation of the ret2* techniques and return

oriented programming (ROP).

Other important stack information that can be targeted is the stack pointer and the saved frame pointer. These values are used to conduct relative addressing of variables within the stack frame, their corruption can be leveraged to complete a "write-what-where" condition. Corruption of the stack pointer and saved frame pointer can also be used to conduct a stack pivot, allowing the attacker to control the location and contents of the stack frame used by the calling function when it resumes execution.

References

- https://web.archive.org/web/20130225162302/http://www.cs.umd.edu/class/sum2003/cmsc311/Notes/Mips/stack.html

- http://asm.sourceforge.net/articles/startup.html

- https://www.agner.org/optimize/calling_conventions.pdf

- http://jdebp.eu./FGA/function-perilogues.html

- https://cwe.mitre.org/data/definitions/787.html

- https://cwe.mitre.org/data/definitions/121.html

- http://phrack.org/issues/49/14.html

Heap buffer overflow

What is a heap?

Heaps are contiguous blocks of memory chunks which malloc() allocates

to a process. Heaps are dynamic in nature, so memory can also be free()d

by a process when the memory is no longer needed. Heap memory is global and

can be accessed and modified from anywhere within the process when referenced

with a valid pointer. Heaps are treated differently depending on whether

they belong to the main arena or not - more on arenas later.

Heaps can be created, extended, trimmed, and destroyed. The main arena

heap is created during the first request for dynamic memory while heaps for

other arenas are created with the new_heap() function. The main arena

heap grows and shrinks with the use of the brk() or sbrk() system calls.

These system calls are used to change the location of the program break,

defining the end of the process's data segment. Increasing the

program break allocates memory to the process; decreasing the break

deallocates memory. [1]

What is a chunk?

Chunks are the fundamental unit of memory that malloc() deals in, they are

pieces of heap memory. An allocated chunk in the heap is structured like this:

word/qword | word/qword

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| prev size | chunk size |A|M|P|

| (not used while allocated) | |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| user data | user data |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| user data | size of next chunk |A|M|P|

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

The flags at the end of the second word/qword represent these chunk

properties:

- A (

NON_MAIN_ARENA) -0for chunks in the main arena. Each thread spawned receives its own arena and for those chunks, this bit is set. - M (

IS_MMAPPED) - The chunk was obtained usingmmap(). - P (

PREV_INUSE) -0when the previous chunk is free. The first chunk allocated will always have this bit set. [3]

The minimum usable chunk size is 0x20, and chunk sizes increase in

increments of 16 bytes: 0x20, 0x30, 0x40, etc.

The top chunk

The top chunk is the "topmost available chunk, i.e. the one bordering the end

of available memory". Requests are only serviced from a top chunk when they

can't be serviced from any other bins in the same arena. malloc() keeps

track of the remaining memory in a top chunk using its chunk_size field,

and the PREV_INUSE bit of a top chunk is always set. A top chunk always

contains enough memory to allocate a minimum-sized chunk and always ends on a

page boundary. [4]

What is an arena?

malloc() administrates a process's heaps using malloc_state structs, or

arenas. Arenas contain bins, data strucutures used for recycling

free chunks of heap memory. Definitions for each type of bin can be found

below:

fastbins

- A collection of singly linked, non-circular lists that each hold free chunks of a specific size.

- The first

word/qwordof a chunk's user data is used as the forward pointer (fd) when linked into a fastbin. - fastbins are LIFO data structures.

- Free chunks are linked into the fastbin if the tcachebin is full.

- For memory requests, the fastbin is searched after the tcachebin and before any other bin.

- There are 10 fastbins with chunk sizes:

16..88bytes. [2]

unsortedbin

- A doubly linked, circular list that holds free chunks of any size; essentially used to optimize resource requests.

- Free chunks are linked directly into the head of an unsortedbin when the tcachebin is full or they are outside tcachebin size range.

- The first

word/qwordof a chunk's user data is used as the forward pointer (fd) and the secondword/qwordis used as a backwards pointer (bk) when linked into an unsortedbin.- In versions of GLIBC compiled without the tcachebin, free chunks are linked directly into the head of an unsortedbin when they are outside fastbin range.

- The unsortedbin is searched after the tcachebin, fastbin, and smallbins when the request size fits those ranges, but before the largebins.

- unsortedbin searches begin from the back and move towards the front.

- If a request fits a chunk within the unsortedbin, the search is stopped and the memory is allocated, otherwise the chunk is sorted into a smallbin or largebin. [2]

smallbins

- A collection of doubly linked, circular lists that each hold free chunks of a specific size.

- Free chunks are only linked into a smallbin when the chunk's arena sorts the unsortedbin.

- The first

word/qwordof a chunk's user data is used as the forward pointer (fd) and the secondword/qwordis used as a backwards pointer (bk) when linked into a smallbin. - smallbins are FIFO data structures.

- For memory requests, smallbins are searched after the tcachebin, after fastbins if the request was in fastbin size range, and before any other bins are searched if the request was in smallbin range.

- There are 62 smallbins with chunk sizes:

16..512bytes (32-bit),16..1024bytes (64-bit). [2]

largebins

- A collection of doubly linked, circular lists that each hold free chunks within a range of sizes.

- Free chunks are only linked into a largebin when the chunk's arena sorts the unsortedbin.

- largebins are maintained in descending size order.

- The largest chunk is accessible via the bin's

fdpointer. - The smallest chunk is accessible via the bin's

bkpointer.

- The largest chunk is accessible via the bin's

- The first

word/qwordof a chunk's user data is used as the forward pointer (fd) and the secondword/qwordis used as a backwards pointer (bk) when linked into a largebin. - The first chunk of its size linked into a largebin has the third and fourth

word/qwords repurposed asfd_nextsizeandbk_nextsize, respectively. - The nextsize pointers are used to form another doubly linked, circular list holding the first chunk of each size linked into that largebin. Subsequent chunks of the same size are added after the first chunk of that size.

- The nextsize pointers are used as a skip list.

- For memory requests, largebins are searched after an unsortedbin, but before a binmap search.

- There are 63 largebins with chunk sizes starting at:

512bytes (32-bit),1024bytes (64-bit). [2]

What is a tcache?

A tcache behaves like an arena but, unlike arenas, is not shared between threads of a process. They are created by allocating space on a heap belonging to their thread's arena and are freed when the thread exits. The purpose of a tcache is to relieve thread contention for malloc's resources by allocating to each thread its own collection of chunks.

- The tcache is present in GLIBC versions >= 2.26.

- A tcache takes the form of a

tcache_perthreadstruct which holds the heads of 64 tcachebins preceded by an array of counters which record the number of chunks in each tcachebin. - tcachebins are singly linked, non-circular lists of free chunks of a specific size.

- When a tcachebin reaches it's maximum number of chunks, free chunks of that bin's size are instead treated as they would be without a tcache present.

- There are 64 tcachebins with chunk sizes:

12..516bytes (32-bit),24..1032bytes (64-bit). [2]

Heap overflow

OK, so all of the previous information was great right? It may seem like a lot, but understanding the intricacies of the GLIBC Heap is important to effectively exploit it. Now, I'll finally define what a heap overflow is:

"A heap overflow is a buffer overflow, where the buffer that can be overwritten is allocated in the heap portion of memory, generally meaning that the buffer was allocated using a routine such as malloc()." [5]

Here's an example of code that presents a heap overflow vulnerability:

#define BUFSIZE 256

int main(int argc, char **argv) {

char *buf;

buf = (char *)malloc(sizeof(char)*BUFSIZE);

strcpy(buf, argv[1]);

}

No bounds checking is conducted on argv[1] before its data is copied into

buf. strcpy() could possibly copy data from argv[1] well past the bounds

of the chunk that was allocated on the heap to hold buf.

Heap overflows are a dangerous weakness for a an application to have and, given the right circumstances, attackers can utilize a variety of techniques to gain arbitrary code execution. Techniques to exploit heap overflows have been studied since the early 2000's and we'll cover a number of these techniques in the following sections.

References

- https://www.blackhat.com/presentations/bh-usa-07/Ferguson/Whitepaper/bh-usa-07-ferguson-WP.pdf

- https://azeria-labs.com/heap-exploitation-part-2-glibc-heap-free-bins/

- https://heap-exploitation.dhavalkapil.com/diving_into_glibc_heap/malloc_chunk.html

- https://azeria-labs.com/heap-exploitation-part-1-understanding-the-glibc-heap-implementation/

- https://cwe.mitre.org/data/definitions/122.html

House of Force

This is a pretty straight-foward and easy technique to understand. To utilize the House of Force technique to gain code execution, an attacker needs the following [1]:

- Ability to conduct a heap overflow to overwrite the

top chunksize field. - Conduct a

malloc()call with an attacker-controlled size. - Conduct a final

malloc()call where the attacker controls the user data.

And the House of Force technique follows these steps:

- Attacker uses a heap buffer overflow to overwrite the

top chunksize field, usually something like0xffffffffffffffff==-1.- Because the

top chunksize field is nowULLONG_MAX, calls tomalloc()can be arbitrarily large and thetop chunkwill be offset by the size of the call, allowing the attacker to place thetop chunkpointer anywhere in memory .

- Because the

- Attacker requests a chunk of size

x, placing thetop chunkdirectly before the target that the attacker intends to write to. - Attacker uses a final allocation to create a chunk with its user data overlapping the target.

What are some good targets to overwrite?

Typically, attackers will attempt to write to these glibc symbols:

__malloc_hook__free_hook

The __malloc_hook and __free_hook symbols are used by glibc to allow

programmers the ability to register functions that will be executed when calls

to malloc() or free() are made. These can be used by a programmer to

acquire statistics, install their own versions of these functions, etc.

Attackers use these hooks to redirect program execution to memory that they

control within the program. For instance, an attacker could write the address

of their ROP chain into either hook, force the program to call malloc() or

free(), and then begin executing their ROP chain to conduct a stack pivot,

etc. It's also possible to overwrite either hook to point to a one_gadget or

system() - you get the picture.

Some other less common targets are:

- The

got.pltsection of the program - The process stack

Going after the Global Offset Table (GOT) to obtain arbitrary code execution is

only a viable option if full Relocation Read-Only (RELRO) [2]

is not enabled. Otherwise, the attacker would encounter a SIGSEV by trying to

write to memory that is not marked as writeable.

Going after the process stack is also a possibility - the attacker could try to

overwrite the return address of a stack frame if they know where it resides

in memory. Unfortunately, Address Space Layout Randomization (ASLR) makes this

difficult to achieve.

Patch

The House of Force went unpatched for 13 years, but an update was finally

made to glibc to check the size of the top chunk on 16 AUG 2018.

[3] The House of Force still works for glibc versions

less than 2.29.

References

- https://gbmaster.wordpress.com/2015/06/28/x86-exploitation-101-house-of-force-jedi-overflow/

- https://ctf101.org/binary-exploitation/relocation-read-only/

- https://sourceware.org/git/?p=glibc.git;a=commitdiff;h=30a17d8c95fbfb15c52d1115803b63aaa73a285c

House of Orange

The original House of Orange technique used a heap overflow vulnerability

to target the top chunk in its first stage of exploitation. Due to this fact,

we can determine that the House of Orange fits within this section of heap

exploitation.

The technique

The House of Orange is an interesting, but somewhat convoluted, technique to gain arbitrary code execution of a vulnerable process. The House of Orange is executed in three stages:

-

Leverage a heap overflow vulnerability to overwrite the size field of the

top chunk.- Overwrite the

top chunkwith a small size, foolingmalloc()in future requests to believe that thetop chunkis smaller than it actually is. - The new

top chunksize must be page-aligned and theprev_inusebit must be set in order to passmalloc()checks. - The attacker forces the program to make another

malloc()call with a size larger than what is currently written to thetop chunksize field. - This

malloc()call will causemalloc()tommap()a new segment of heap memory.malloc()will also determine that the new segment of heap memory and thetop chunkare not contiguous, causingmalloc()tofree()the remaining space of thetop chunk. - This newly free chunk will be too large for the fastbin, it will be linked into the unsortedbin.

- Overwrite the

-

Use the same heap overflow vulnerability and chunk to overwrite the newly freed

top chunkthat resides in the unsortedbin.- The attacker forges the metadata for a fake chunk, setting the chunk size

to

0x61, and setting thebkpointer to a chunk that overlaps_IO_list_allinglibc. - The attacker will use this to conduct an Unsortedbin Attack, writing

the memory address of the unsortedbin head in the

main arenato_IO_list_all. - The attacker uses the heap overflow to write a fake

_IO_FILEstruct into the heap, forging avtable_ptrthat points back into attacker controlled memory with the intent of overwriting theoverflowmethod of the struct tosystem().

- The attacker forges the metadata for a fake chunk, setting the chunk size

to

-

The attacker requests a chunk smaller than the forged chunk that was just created, causing

malloc()to sort the free chunk into the smallbin, triggering an Unsortedbin Attack.malloc()attempts to follow our forgedbk, however, chunk metadata checks will causemalloc()to call__malloc_printerr(), leading to aSIGABRT.- When the program begins its exit procedures, it attempts to clear the buffers of all open file streams, including our forged one.

glibcfollows_IO_list_allwhich now points to themain_arena. Themain_arenafails_IO_FILEstruct checks, andglibcmoves on to the next_IO_FILEstruct pointed to by themain_arena's fakechainmember - our smallbin.glibcinspects the forged_IO_FILEstruct the attacker created in the heap using a heap overflow and executes theoverflowmethod listed in thevtableof the forged_IO_FILEstruct. The attacker has overwritten theoverflowmethod listed in thevtableto point tosystem().- The address of the

_IO_FILEis passed to this call tosystem()- the attacker ensures that the string/bin/sh\0resides at this location, the very first word of bytes in the forged_IO_FILEstruct.

Some notes

Like I said, this technique is convoluted. The attacker needs to have the following in order to exploit this vulnerability:

- Ability to edit chunk data

- Ability to control

malloc()allocation size - Heap and

glibcaddress leak ifASLRis enabled - Heap overflow

Patch

There doesn't seem to be any specific patch that attempts to mitigate

exploitation using the House of Orange. Because there are so many

conditions necessary to effectively exploit this technique, the summation of

the mitigations applied to glibc over the years have made this technique

obsolete.

For instance, the patch applied to actually check the validity of the bk

pointer in the unsortedbin causes the Unsortedbin Attack used to execute

this technique to fail. In addition, glibc after version 2.28 no longer

traverses through all open file streams at program exit to call the overflow

method of the stream. This mitigation thwarts this techniques use of

File Stream Oriented Programming to gain code execution.

References

- https://4ngelboy.blogspot.com/2016/10/hitcon-ctf-qual-2016-house-of-orange.html

- https://ctf-wiki.github.io/ctf-wiki/pwn/linux/io_file/fsop/

Single Byte Overflows

Single byte overflows are pretty dangerous bugs to have in a program, given the

right conditions. A stack-based, single byte, NULL buffer overflow was

demonstrated in this this post in

1998, used to overwrite the LSB of the ebp causing the stack frame to be

relocated to a lower address in memory - a location in the stack that the user

controls and can forge a fake stack frame.

In 2014, Google Project Zero releases The poisoned NUL byte, 2014 edition,

demonstrating that a heap-based, single byte, NULL buffer overflow can also

be used to gain code execution. [2]

Techniques

The following subsections cover a white paper from Accenture Security that goes in-depth on different methods of exploiting a one byte heap-based buffer overflow to gain overlapping chunks and possibly code execution. [3]

Extending free chunks

A diagram for this technique can be found in section 3.2.1 of

[3]. With a one byte heap-based buffer overflow, the attacker

will write actual information to the size field of a free chunk, increasing

its size. An allocation larger than the corrupted chunk's original size will

cause the chunk to overlap into succeeding chunks.

This scenario relies on the fact that malloc() does not check the prev_size

field of the succeeding chunk when allocating a previously free()d chunk.

Extending allocated chunks

This technique is similar to the one above, it's just that the series of

operations is reordered. A diagram for this technique can be found in section

3.2.2 of [3]. Essentially, the corruption happens before the

victim, corrupted chunk is free()d. An attacker writes one byte, increasing

the size of the corrupted chunk. After the corrupted chunk is free()d,

another allocation is requested with a size greater than the original size

of the corrupted chunk, causing the corrupted chunk's user data to overlap

the succeeding chunk.

This technique exploits the fact that free() has no ability to determine if

the corrupted chunk's size field is supposed to be larger or smaller, as the

only location that contains the chunk's size metadata is the size field

corrupted by the one byte heap-based buffer overflow.

Shrinking free chunks

Here's our heap-based, single byte, NULL buffer overflow - a memory

corruption vulnerability that can lead to some interesting outcomes. A good

demonstration of this technique exists on the how2heap repo,

here. The diagram for this technique can be found in section

3.2.3 of [3].

The initial state of the program involves having three chunks allocated on the

heap, all too large for the fastbin. The chunk in the middle of these three

will have a size such that a NULL byte buffer overflow from the preceding

chunk will overwrite the size field of the middle chunk, causing it to shrink

in size. The example provided sets the middle chunk's size to 0x210 and,

after the NULL byte overflow, its size is set to 0x200.

Before the memory corruption occurs, the middle chunk is free()d. This is

required because the prev_size field of the succeeding chunk must be set to

0x210. The attacker conducts the NULL byte buffer overflow and sets the

free chunk's size to 0x200. In later updates to glibc, the prev_size

field and the size field of the chunk about to be backwards consolidated is

now checked for consistency. Attackers must now write a valid prev_size field

to the succeeding chunk before attempting this backwards consolidation.

Two chunk are now allocated from this newly free()d space, one chunk that's

not within fastbin range, and subsequent chunks that are. We want chunks that

are in fastbin range to avoid having these chunks subjected to malloc()'s

consolidation and sorting behavior. We free the first chunk of the two chunks

we just allocted, placing it in the unsortedbin.

Almost there, the attacker frees the third chunk of the original three, causing

free() to inspect the prev_size field of the third chunk and consolidating

it with the chunk that we just free()d into the unsortedbin. This free space

now overlaps the fastbin sized chunk we allocated earlier.

Finally, we allocate a chunk large enough to overlap the fastbin sized chunk

that still remains. Because of this heap-based, one byte, NULL buffer

overflow, we have allocated two chunks that overlap one another which can

easily lead to the implementation of other exploitation primitives.

Exploitation

In [3], further dicussion is provided on how to use overlapping chunks to leak sensitive information and gain code exeuction. In section 4.3.2, the authors demonstrate that they can create overlapping unsortedbin size chunks.

They free() the unsortedbin eligible chunk, and the pointer to the head of

the unsortedbin, which resides in the main_arena, is written to the fd and

bk pointers of the chunk. Because they still maintain a chunk overlapping

this now free chunk, they can leak the memory of the free chunk to obtain its

fd and bk pointers, providing them a glibc leak.

Not covered in this white paper, but possible nonetheless, is that the

allocation and free()ing of two fastbin eligible chunks can lead to a heap

location leak. If these two fastbin eligible chunks are free()d while being

overlapped by a much larger, allocated chunk, the attacker could feasibly read

the fd pointers of these fastbin free chunks to derive the base of the heap.

Finally, to gain code execution, the authors of this white paper use a Global

Offset Table (GOT) overwrite. For the application they are exploiting, the

program maintains sensitive structures within the heap and, within these

structures, there are pointers that will be used to write incoming data from

a socket connection. The authors generate two of these structures in the heap,

one to leak glibc and one to conduct arbitrary writes. The authors use the

glibc leak to derive the location of the GOT - for this application's

environment, the GOT is always a fixed offset away from the glibc base. Once

they've derived the location of free@GOT, they corrupt a heap structure's

write pointer to point to free@GOT. After this, they send a message which

causes the program to overwrite free@GOT with the pointer to system@libc.

Coercing the program to call free@plt leads to system@libc with a command

they provide.

Patch

A patch that thwarts the shrinking of chunks to gain overlapping chunks was

implemented on AUG 2018. This is referenced by the note earlier, as this patch

conducts a consistency check between the next->prev_size and victim->size

of a victim within the unsortedbin before sorting or consolidating the

victim. [5]

References

- https://seclists.org/bugtraq/1998/Oct/109

- https://googleprojectzero.blogspot.com/2014/08/the-poisoned-nul-byte-2014-edition.html

- https://www.contextis.com/en/resources/white-papers/glibc-adventures-the-forgotten-chunks

- https://github.com/shellphish/how2heap/blob/master/glibc_2.23/poison_null_byte.c

- https://sourceware.org/git/?p=glibc.git;a=commitdiff;h=b90ddd08f6dd688e651df9ee89ca3a69ff88cd0c

Use-after-free (UAF)

The Use-after-free vulnerability can be defined as the use of heap allocated memory after it has been freed or deleted. [1] This can lead to undefined behavior by the program and is commonly used by attackers to implement a Write-what-where condition. [2]

Double frees and UAF vulnerabilities are closely related, and double frees

can be used to duplicate chunks in the fastbin, eventually allowing the

attacker to acquire a pointer to free memory. [3]

Heap overflows can also lead to a UAF vulnerability, given the right

conditions. This is discussed further in the exploitation portion of

Single Byte Overflows as we leak glibc

addresses from an unsortedbin chunk using our overlapping chunk.

Provided below is an example of a UAF from OWASP.org [4]:

#include <stdio.h>

#include <unistd.h>

#define BUFSIZER1 512

#define BUFSIZER2 ((BUFSIZER1/2) - 8)

int main(int argc, char **argv) {

char *buf1R1;

char *buf2R1;

char *buf2R2;

char *buf3R2;

buf1R1 = (char *) malloc(BUFSIZER1);

buf2R1 = (char *) malloc(BUFSIZER1);

free(buf2R1);

buf2R2 = (char *) malloc(BUFSIZER2);

buf3R2 = (char *) malloc(BUFSIZER2);

strncpy(buf2R1, argv[1], BUFSIZER1-1);

free(buf1R1);

free(buf2R2);

free(buf3R2);

}

The following sections, Fastbin Dup and Unsortedbin Attack, demonstrate how UAF vulnerabilities can be leveraged to gain arbitrary code execution.

References

- https://cwe.mitre.org/data/definitions/416.html

- https://cwe.mitre.org/data/definitions/123.html

- https://cwe.mitre.org/data/definitions/415.html

- https://owasp.org/www-community/vulnerabilities/Using_freed_memory

Fastbin Dup

While the Fastbin Dup technique can be implemented using a heap buffer overflow , the most common example used to demonstrate this technique is by using the Use-after-free (UAF) and Double Free vulnerabilities.

The technique

The steps to execute the Fastbin Dup technique are as follows:

- Leverage a Double Free vulnerability to free a victim chunk twice - this chunk must be small enough that it gets linked into the fastbin.

- Coerce the program to execute

malloc()to allocate the victim chunk from the fastbin. - Overwrite the

fdpointer of the victim chunk to point to a fake chunk that overlaps the location of our arbitrary write target.- Since the inception of this technique, there have been some

glibcmitigations implemented to check if the next chunk in the fastbin contains a valid size field. You can find fake chunk candidates near your target write location using thefind_fake_fastcommand withpwndbg.

- Since the inception of this technique, there have been some

- Coerce the program to execute

malloc()until we receive a pointer to the same victim chunk, however, this time we don't need to do anything with the user data. The chunk overlapping our write target now exists within the fastbin. - Coerce the program to execute

malloc()one last time, providing us a pointer to the chunk overlapping our write target. - Use the pointer to the fake chunk to conduct our arbitrary write.

Write targets

So what targets do we wish to write to? Well, the usual candidates are: the

__free_hook, __malloc_hook, or the Global Offset Table (GOT). Other

techniques also target the stack which is a viable option if you can accurately

determine where the return address of the current function is located. After we

gain the ability to conduct an arbitrary write, gaining code execution should

be trivial with any other technique.

Requirements

An attacker using the Fastbin Dup must have these conditions present:

- Have chunks that are in the fastbin

- Have a UAF vulnerability with the ability to control the data written to the free chunk

- Have the ability to control the data written to the chunk that overlaps the

write target (

__free_hook, etc.) - Have a memory leak that allows the attacker to defeat ASLR if enabled

Patch

Unfortunately, the researchers at Checkpoint Research proposed protections

for the fd pointers used to implement the linked list data structures of the

fastbin and tcache. [2] To prevent attackers from leveraging the

Fastbin and Tcache Dup techniques, these researchers implemented the

PROTECT_PTR and REVEAL_PTR macros for the fd pointer of the fastbin and

tcache singly-linked lists.

Summarizing the implementation of these macros, the fd pointer of the fastbin

and tcache are mangled using the random bits of the virtual memory address

currently holding the fd pointer. The macro definitions are as follows

[3]:

#define PROTECT_PTR(pos, ptr) \

((__typeof (ptr)) ((((size_t) pos) >> 12) ^ ((size_t) ptr)))

#define REVEAL_PTR(ptr) PROTECT_PTR (&ptr, ptr)

These mitigations make the Fastbin Dup technique significantly harder to pull

off. Now, in order to forge a fd pointer, the attacker has to leak

sensitive information from the process to derive the location of their

arbitrary write target in memory. They must use that same address to mangle

their forged fd pointer before writing it to the victim chunk. The final

nail in the coffin, however, is the fact that the pointer must be page-aligned.

This, coupled with checks done by glibc to verify the size field of our fake

chunk overlapping our write target, invalidates our ability to use the above

technique to overwrite something like the __malloc_hook to gain control of

the RIP.

References

- https://github.com/shellphish/how2heap/blob/master/glibc_2.31/fastbin_dup.c

- https://research.checkpoint.com/2020/safe-linking-eliminating-a-20-year-old-malloc-exploit-primitive/

- https://sourceware.org/git/?p=glibc.git;a=commitdiff;h=a1a486d70ebcc47a686ff5846875eacad0940e41

Unsortedbin Attack

The Unsortedbin Attack and the Use-after-free (UAF) vulnerability are loosely related enough for me to write about it in this section. The Unsortedbin Attack can be used if you have a heap overflow vulnerability, as shown in the House of Orange, however, for that version of the technique to work some special conditions need to be present. For the purpose of this discussion, we're only going to concern ourselves with the Unsortedbin Attack implemented with a Use-after-free (UAF) vulnerability.

How it works

This technique is actually pretty simple. To leverage this attack, the attacker needs to be able to:

- Acquire a chunk on the heap that is too large for the fastbin

- Acquire another chunk after the previously mentioned chunk that acts as a fencepost between the previously mentioned chunk and the top chunk.

- Free the first chunk.

- Edit the first chunk (UAF vulnerability).

- Execute another

malloc(), requesting a chunk of the same size as the one that wasfree()'d and linked into the Unsortedbin.

So what happens here?

The first chunk on the heap is too large for the fastbin so, when it is

free()'d, it is linked into the Unsortedbin. This free chunk will be linked

into a small or largebin, based upon its size, during the next call to

malloc(). We create a buffer chunk between this previously mentioned chunk

and the top chunk prior to trying to free the first chunk, otherwise our

victim chunk will be consolidated into the top chunk.

With our Use-after-free (UAF) vulnerability, we overwrite the bk pointer of

the chunk in the Unsortedbin to point to an address that we want to write to.

What are we writing? Well, when an Unsortedbin chunk is chosen to service a

malloc() call, the memory address of the main_arena that contains the head

of the Unsortedbin is written to where the bk points to.

How is this used for exploitation?

Honestly, the Unsortedbin Attack is often used to enable other techniques. It's usually a precursor to further exploitation but, by itself, it's not useful for gaining code execution.

A great example technique that leverage the Unsortedbin Attack to gain code

execution is the House of Orange. In that example,

however, the vulnerability leveraged to overwrite the bk of the chunk in the

Unsortedbin is a heap overflow.

Another good example is the zerostorage challenge from 0ctf 2016

. In this challenge, the attacker uses a UAF vulnerability to overwrite the

bk of a chunk in the Unsortedbin and then forces an allocation of this chunk

to overwrite the symbol libc.global_max_fast. global_max_fast represents

the largest size a fastbin chunk can be - overwriting it with the memory

address that contains the Unsortedbin head, making the size of fastbin chunks

almost arbitrarily large.

Using the above, the attacker now has the ability to create fastbin chunks and,

because a UAF vulnerability is present, the attacker can leverage the Fastbin